Parts of this write-up come from a blog post I wrote for the Syracuse innovation team, which is hosted on their blog.

Timeline:

The mapping software was created in three phases: Planning, Development, and Deployment. Planning took 3.5 months and Development took 2.5 months. This post details the first iteration of the program.

The proof of concept had 2 key parts:

- Operate in near real time, showing streets being updated at 1 to 10 minute intervals. This posed the challenge of requiring specialized software to run a geofencing server. The server would store geofences and be able to associate actions with them (e.g., if a truck enters the area, send a message with the truck number).

- Stay light on the user’s browser. Initial testing produced 10-20 megabyte web pages that would throw a “Page Unresponsive” error in Chrome, Firefox, and Edge. The fix was to use minified JSON files (as small as a JSON file can get) as data sources that a lightweight client loads and then stores in memory, reducing the resources required for initial access.

Planning:

Planning was a two-part process: assessment and GIS research. Assessment started with understanding the capabilities of the Application Programming Interfaces (APIs) to which I had access. The first of those was our vehicle tracking API, which returns City vehicle locations as GPS coordinates (latitude, longitude). Translating those coordinates into a city street block is a two-step problem. Using geofences, which are polygons of coordinates with an action attached to them, I was able to generate one geofence per city block and track when a vehicle encountered that fence.
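To make the geofence idea concrete, here is a minimal sketch of a polygon with an action attached to it. It is not the project's code: the `Geofence` class, the ray-casting test, and the rectangle's coordinates are assumptions made up for illustration, and it only checks whether a vehicle is currently inside the polygon rather than tracking the moment of entry.

```python
from typing import Callable, List, Tuple

Point = Tuple[float, float]  # (latitude, longitude)


def point_in_polygon(point: Point, polygon: List[Point]) -> bool:
    """Ray-casting test: toggle 'inside' each time a ray from the point crosses an edge."""
    lat, lon = point
    inside = False
    n = len(polygon)
    for i in range(n):
        lat1, lon1 = polygon[i]
        lat2, lon2 = polygon[(i + 1) % n]
        # Only edges that straddle the point's latitude can be crossed.
        if (lat1 > lat) != (lat2 > lat):
            # Longitude at which the edge crosses that latitude.
            cross_lon = lon1 + (lat - lat1) * (lon2 - lon1) / (lat2 - lat1)
            if lon < cross_lon:
                inside = not inside
    return inside


class Geofence:
    """A polygon plus an action to run when a vehicle is found inside it."""

    def __init__(self, block_id: int, polygon: List[Point],
                 on_hit: Callable[[int, int], None]) -> None:
        self.block_id = block_id
        self.polygon = polygon
        self.on_hit = on_hit

    def check(self, vehicle_id: int, position: Point) -> bool:
        hit = point_in_polygon(position, self.polygon)
        if hit:
            self.on_hit(self.block_id, vehicle_id)
        return hit


# Hypothetical rectangle standing in for one city block.
events = []
fence = Geofence(
    324,
    [(43.0550, -76.1090), (43.0550, -76.1080),
     (43.0560, -76.1080), (43.0560, -76.1090)],
    lambda block_id, vehicle_id: events.append((block_id, vehicle_id)),
)
fence.check(951175, (43.055431, -76.108658))  # position inside the rectangle
fence.check(955670, (43.0570, -76.1085))      # position north of the rectangle
```

After both checks, `events` holds a single entry for the first vehicle, which is the "send a message with the truck number" behavior in miniature.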

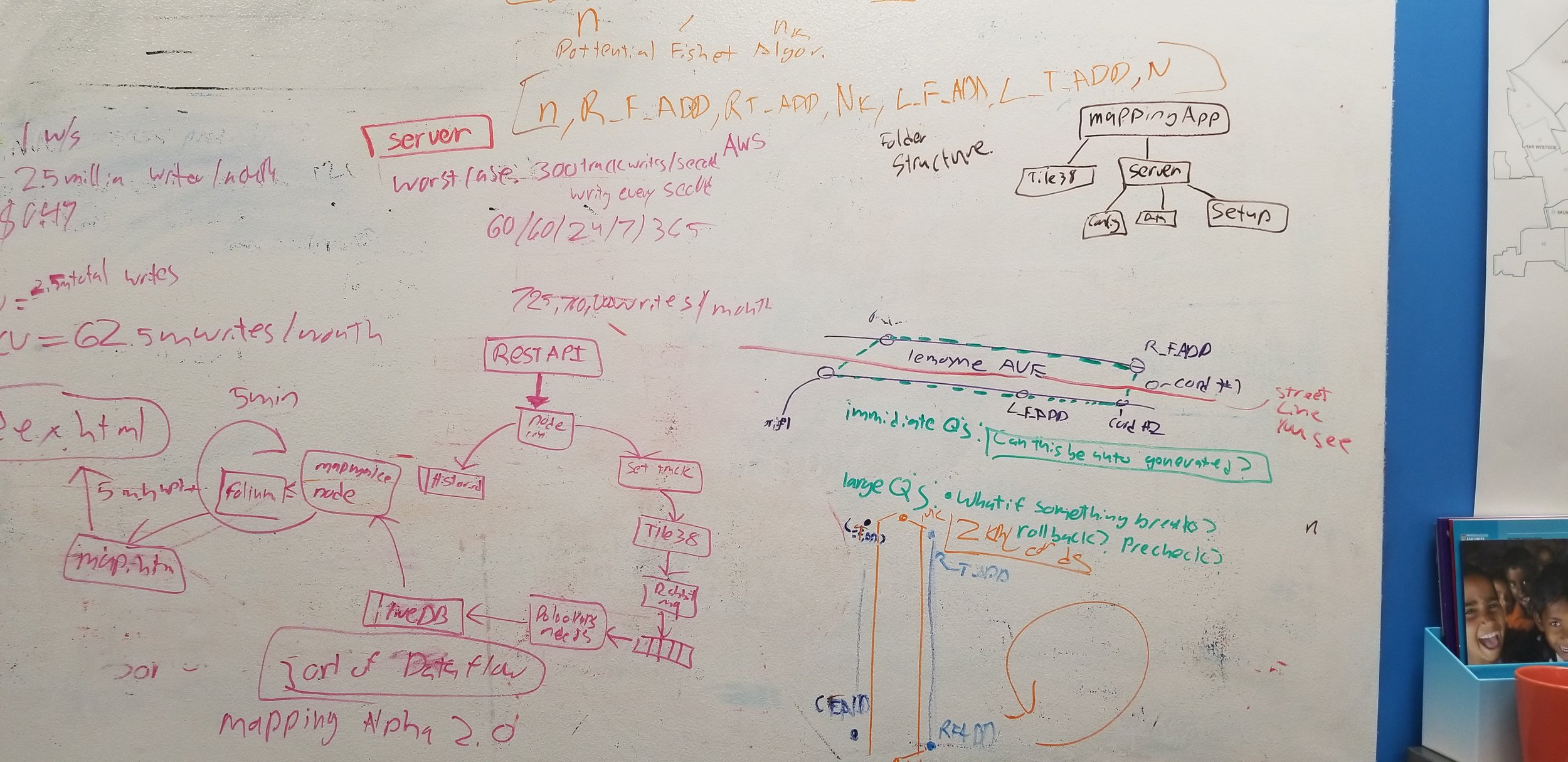

Above: planning of snow plow map architecture and processing.

Development:

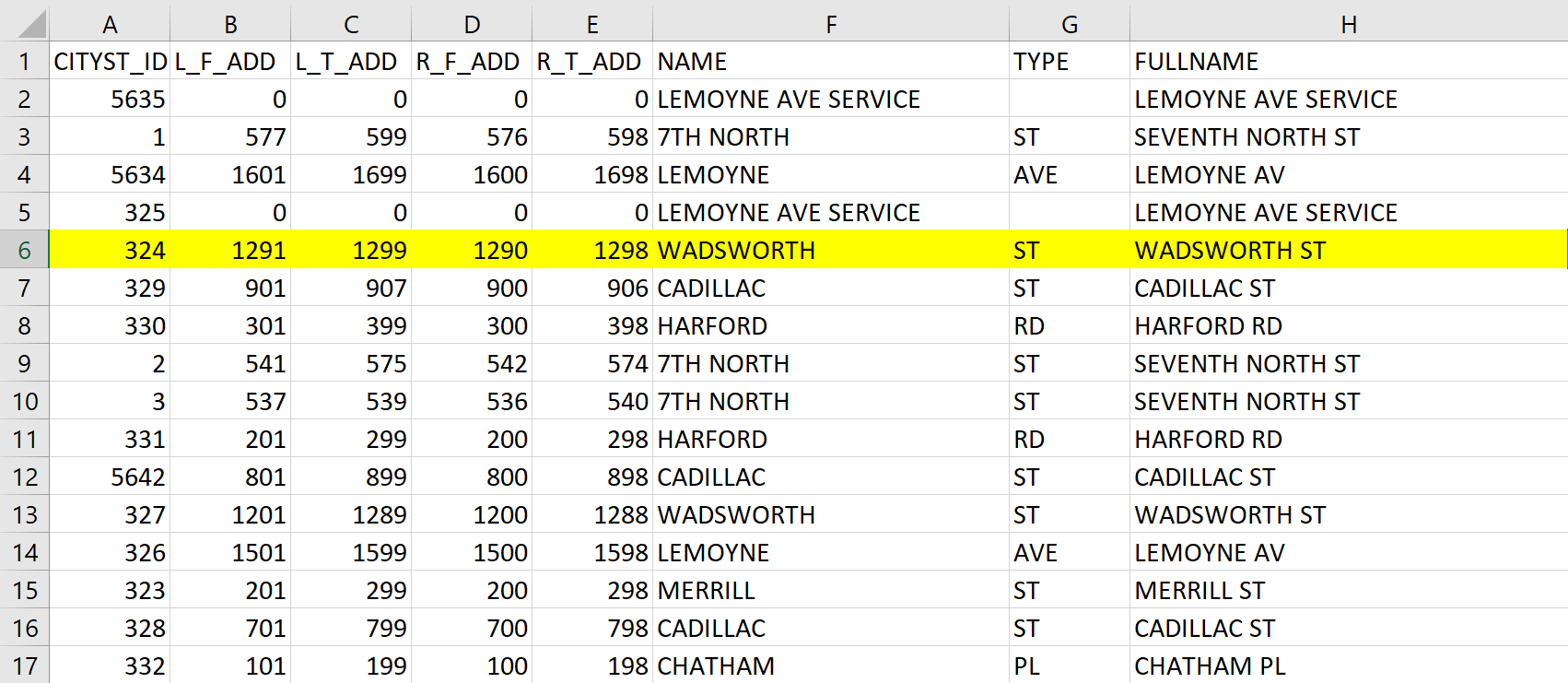

Development followed a modified agile workflow, with small proofs of concept being produced and eventually combined into a larger product. It was also an experiment in using Amazon’s AWS (EC2) as a development environment alongside other Amazon services for the stack: DynamoDB (NoSQL) for the database and S3 for static site hosting (like Google Drive or GitHub). The first step used the City Streets 2011 KML (a file format used to display geographic data) found at data.syrgov.net: converting the four corner street addresses of each CITYST_ID block into usable geocoordinates (geocoding). This created four unique points that could be combined to form a polygon for a specific city block. Those coordinate pairs were then stored in arrays per CITYST_ID.

The street address for each corner is created by combining the L_F_ADD/R_F_ADD/L_T_ADD/R_T_ADD, NAME, and TYPE columns in the KML file. For example, the L_F_ADD address for CITYST_ID 324 is 1291 Wadsworth St.
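The address-to-polygon step can be sketched roughly as follows. This is an illustration under assumptions, not the original script: the row values, the corner ordering, and the coordinates (which stand in for whatever a geocoder would return) are all hypothetical. Only the column names come from the KML description above.

```python
from typing import Dict, List, Tuple

# Assumed corner order so the outline does not cross itself:
# left-from -> right-from -> right-to -> left-to.
CORNER_COLUMNS = ["L_F_ADD", "R_F_ADD", "R_T_ADD", "L_T_ADD"]


def corner_addresses(row: Dict[str, str]) -> List[str]:
    """Join each house-number column with NAME and TYPE to get a street address."""
    return [f"{row[col]} {row['NAME']} {row['TYPE']}" for col in CORNER_COLUMNS]


def block_polygon(corners: List[Tuple[float, float]]) -> dict:
    """Build a GeoJSON Polygon from (latitude, longitude) corner points."""
    # GeoJSON positions are [longitude, latitude], and a ring must end on
    # the same position it starts with.
    ring = [[lon, lat] for lat, lon in corners]
    ring.append(ring[0])
    return {"type": "Polygon", "coordinates": [ring]}


# Hypothetical row; real rows come from the City Streets 2011 KML.
row = {"CITYST_ID": "324", "L_F_ADD": "1291", "R_F_ADD": "1290",
       "L_T_ADD": "1299", "R_T_ADD": "1298", "NAME": "EXAMPLE", "TYPE": "ST"}
addresses = corner_addresses(row)

# Made-up coordinates standing in for the geocoded addresses, in the same order.
geocoded = [(43.0550, -76.1090), (43.0550, -76.1080),
            (43.0560, -76.1080), (43.0560, -76.1090)]
polygons = {int(row["CITYST_ID"]): block_polygon(geocoded)}
```

Keying the resulting dictionary by CITYST_ID mirrors the "arrays per CITYST_ID" storage described above.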

This process made it possible to visualize and filter the geofences before they were built into a larger program. The program was designed so that the geofences were fed into a geofencing server, Tile38, which would then send messages to a message broker, RabbitMQ. From there, a Python program would listen to the broker and make updates to a DynamoDB database. This geofencing design proved too complicated to bring to fruition.

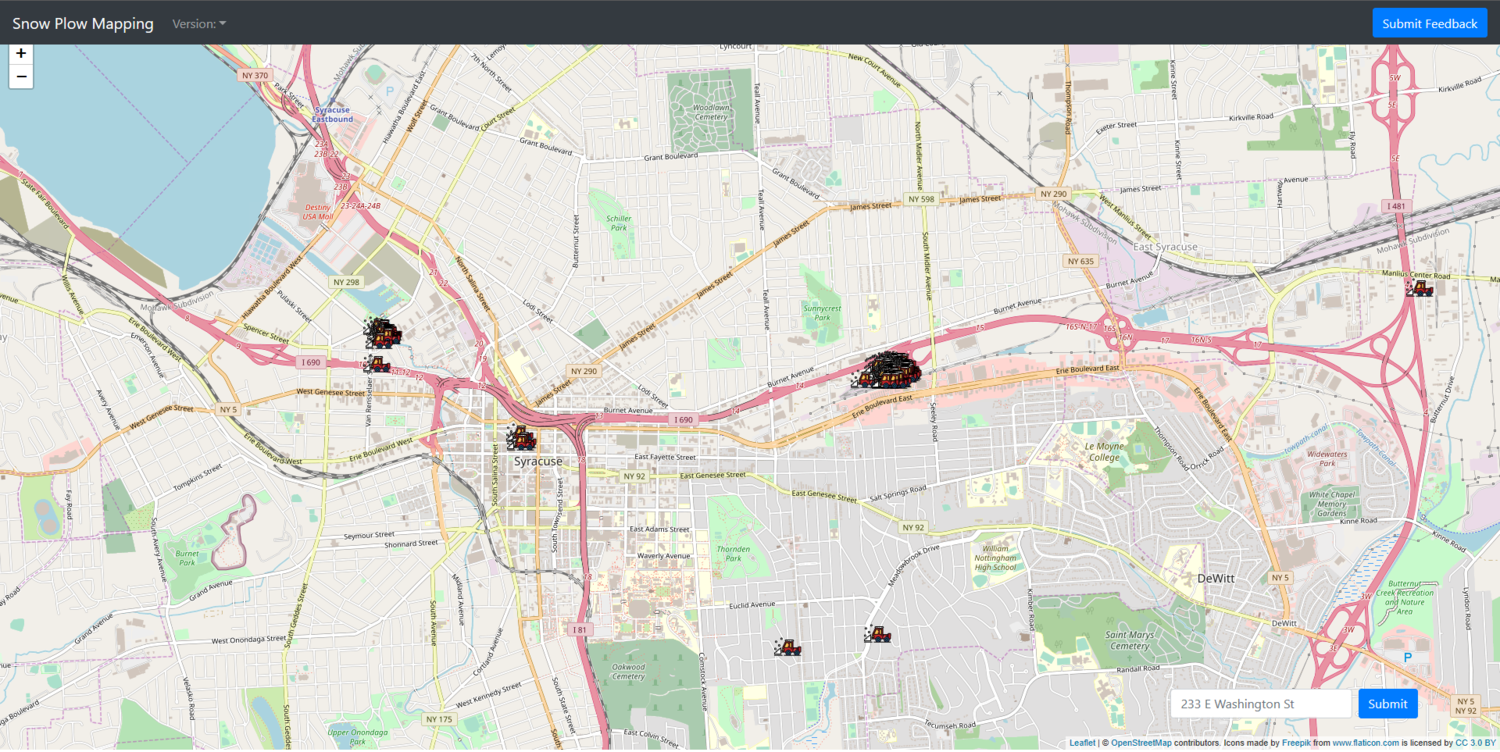

Above: Filtered geofences displayed using Leaflet in the Chrome web browser.

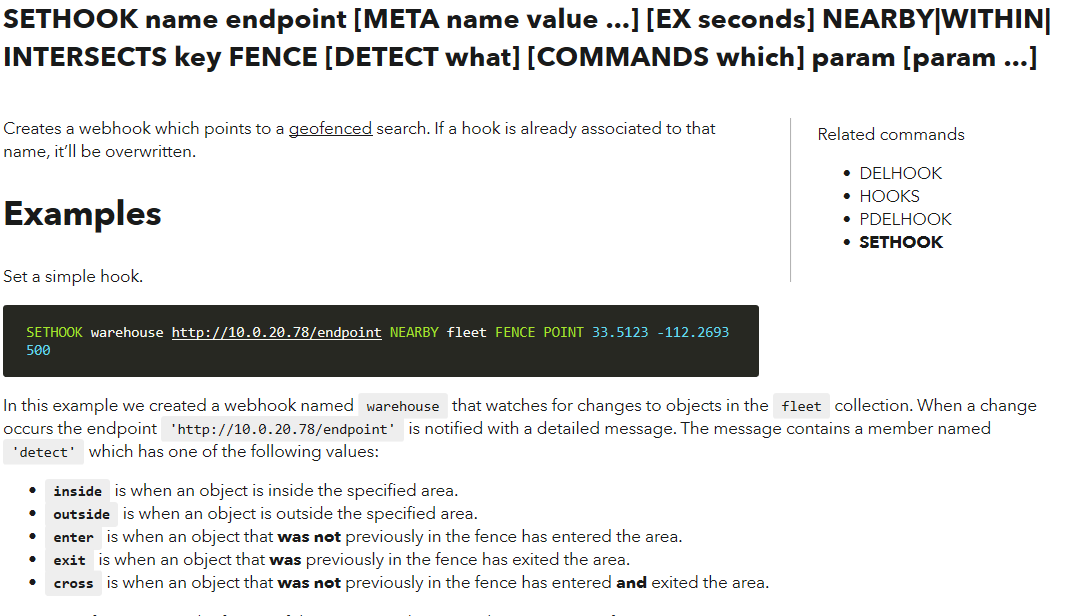

To map the street data coming from the server-side messages to the front end, the system needed a universal primary key: CITYST_ID from the initial KML file. Within the DynamoDB database, the live street data had its own table with CITYST_ID as the primary key. The idea was that Tile38 would have webhooks named after the CITYST_ID of the geofence they represented, so when a webhook was triggered the system would know a vehicle was in that geofence.

Above: Screenshot from the Tile38 documentation on webhooks. https://tile38.com/commands/sethook/
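The webhook naming idea could look something like the sketch below, where the hook name doubles as the CITYST_ID. Since this part of the design was never finished, everything here is an assumption: the handler name, the in-memory dictionary standing in for the DynamoDB live street table, and the message fields, which are modeled on the general shape of a Tile38 geofence notification and should be checked against the Tile38 documentation.

```python
import json
from typing import Dict, Optional


def handle_hook_event(raw: str, table: Dict[int, dict]) -> Optional[int]:
    """Record a geofence hit, treating the hook name as the CITYST_ID."""
    event = json.loads(raw)
    # Ignore exits and any other notification types.
    if event.get("detect") not in ("enter", "inside"):
        return None
    cityst_id = int(event["hook"])
    table[cityst_id] = {
        "CITYST_ID": cityst_id,      # primary key shared with the front end
        "vehicleId": event["id"],
        "lastSeen": event["time"],
    }
    return cityst_id


# Hypothetical notification for the geofence named "324".
sample = json.dumps({
    "command": "set", "detect": "enter", "hook": "324", "key": "plows",
    "time": "2018-10-12T14:31:36Z", "id": "951175",
    "object": {"type": "Point", "coordinates": [-76.108658, 43.055431]},
})

street_table: Dict[int, dict] = {}  # stand-in for the live street table
handle_hook_event(sample, street_table)
```

Because the key is the same CITYST_ID used in the KML file, the front end could look up a street's status without any extra translation step.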

Another part was getting the data for the City’s Snow & Ice vehicles (this includes snow plows). This was handled by a Python function that requested the locations of all of the snow plow trucks, stored the data in an array, created a minified JSON file of that data, and transferred it to the S3 bucket (similar to a folder in Google Drive). The client browser would load that file and then store it in memory, reducing the resources required for initial access.
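As a small illustration of what "minified" means here, the sketch below serializes the same data twice with Python's standard `json` module: once with the default separators and once with the whitespace removed. The `to_minified_json` helper and the sample payload are assumptions for illustration; the upload to S3 is left out.

```python
import json


def to_minified_json(payload: dict) -> str:
    """Serialize without the spaces json.dumps puts after ',' and ':' by default."""
    return json.dumps(payload, separators=(",", ":"))


# Trimmed-down payload in the shape of the vehicle tracking response.
vehicles = {
    "count": 2,
    "gpsMessage": [
        {"vehicleId": 951175, "latitude": 43.055431, "longitude": -76.108658,
         "messageTime": "2018-10-12T14:31:36Z"},
        {"vehicleId": 955670, "latitude": 43.055716, "longitude": -76.10752,
         "messageTime": "2018-10-12T15:06:51Z"},
    ],
}

default_text = json.dumps(vehicles)
minified_text = to_minified_json(vehicles)
saved_bytes = len(default_text) - len(minified_text)
```

The savings per file are modest, but the file is fetched by every visitor, and the minified text still parses back to exactly the same data.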

{"@type": "PagedGpsMessageResult", "index": 0, "limit": 1000, "count": 121, "total": 121, "gpsMessage": [{"messageTime": "2018-10-12T14:31:36Z", "satellite": false, "latitude": 43.055431, "longitude": -76.108658, "accuracy": {"@units": "MILES", "value": 0.006213711922373339}, "odometer": {"@units": "MILES", "@timestamp": "2018-10-12T14:31:36Z", "value": 2079.85}, "keyOn": false, "parked": false, "lastSpeed": 0, "avgSpeed": 0, "maxSpeed": 0, "vehicleId": 951175}, {"messageTime": "2018-10-12T15:06:51Z", "satellite": false, "latitude": 43.055716, "longitude": -76.10752, "accuracy": {"@units": "MILES", "value": 0.006213711922373339}, "odometer": {"@units": "MILES", "@timestamp": "2018-10-12T15:06:51Z", "value": 94543.3}, "keyOn": false, "parked": false, "lastSpeed": 0, "avgSpeed": 0, "maxSpeed": 0, "vehicleId": 955670}]}

Above: Example of a vehicle location JSON message.

At one-minute intervals, the server-side portion of the system would call the vehicle tracking API to retrieve the locations of the Snow & Ice vehicles. Once the data was retrieved, the VehicleID, Latitude, Longitude, MessageTime*, and currentEpochTime** for each vehicle would be uploaded to the Historical Vehicle Location table in our DynamoDB instance to create a historical record that could be reviewed later. At 10-minute intervals, the system would upload the API response as a JSON file to the AWS S3 bucket so anyone viewing the website could see where the trucks were. The front end would load this JSON file and create a JavaScript Map object (a collection of key-value pairs) keyed by VehicleID, with this structure: [Key: VehicleID, Value: Leaflet Marker Object].

*The time the vehicle tracking API received the message from the vehicle.

**The Unix way of keeping time within a system: the number of seconds that have elapsed since 00:00:00 UTC on Thursday, 1 January 1970. It provides an easy way to sort dates without having to parse other date formats (e.g., Jan. 1, 1970 00:00:00).
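The polling step described above can be sketched as follows. This is a minimal illustration, not the original function: the helper names are hypothetical, the DynamoDB write and the S3 upload are left out, and the sample response is trimmed to the fields that end up in the history table.

```python
import time
from datetime import datetime, timezone
from typing import List, Optional


def message_epoch(message_time: str) -> int:
    """Convert an API timestamp such as '2018-10-12T14:31:36Z' to epoch seconds."""
    parsed = datetime.strptime(message_time, "%Y-%m-%dT%H:%M:%SZ")
    return int(parsed.replace(tzinfo=timezone.utc).timestamp())


def historical_records(response: dict, now: Optional[float] = None) -> List[dict]:
    """Flatten one API response into one row per vehicle for the history table."""
    epoch = int(time.time() if now is None else now)
    return [
        {
            "VehicleID": msg["vehicleId"],
            "Latitude": msg["latitude"],
            "Longitude": msg["longitude"],
            "MessageTime": msg["messageTime"],
            "currentEpochTime": epoch,
        }
        for msg in response.get("gpsMessage", [])
    ]


def should_publish(minute: int, every: int = 10) -> bool:
    """True on every tenth one-minute poll, when the public JSON file is refreshed."""
    return minute % every == 0


response = {"gpsMessage": [
    {"vehicleId": 955670, "latitude": 43.055716, "longitude": -76.10752,
     "messageTime": "2018-10-12T15:06:51Z"},
    {"vehicleId": 951175, "latitude": 43.055431, "longitude": -76.108658,
     "messageTime": "2018-10-12T14:31:36Z"},
]}
rows = historical_records(response)

# Epoch seconds are plain integers, so sorting by time needs no date parsing later.
oldest_first = sorted(rows, key=lambda row: message_epoch(row["MessageTime"]))

# The front end keeps a similar lookup, keyed by vehicle ID.
by_vehicle = {row["VehicleID"]: row for row in rows}
```

Keeping the one-minute history write and the ten-minute publish as separate decisions means the public file can be refreshed less often without losing any historical detail.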

Once the backend architecture was taking shape, I moved on to the client side of the application. The client was designed to be light in terms of initial download, which had a few consequences. The initial download was on the order of 75% lighter than a fully constructed Folium (a Leaflet map creation library) HTML map file, but the client generates all of its objects on the user’s device, except for some assets stored on Content Delivery Networks (servers around the globe providing high-speed access to media or other assets). In all the tests I conducted, this method was faster. Loading a completed map (a 10-20 MB file) would crash the Chrome instance I was using and freeze the machine, because browsers are not designed to download excessively large HTML files; they do better with gradual streams of data such as media files.

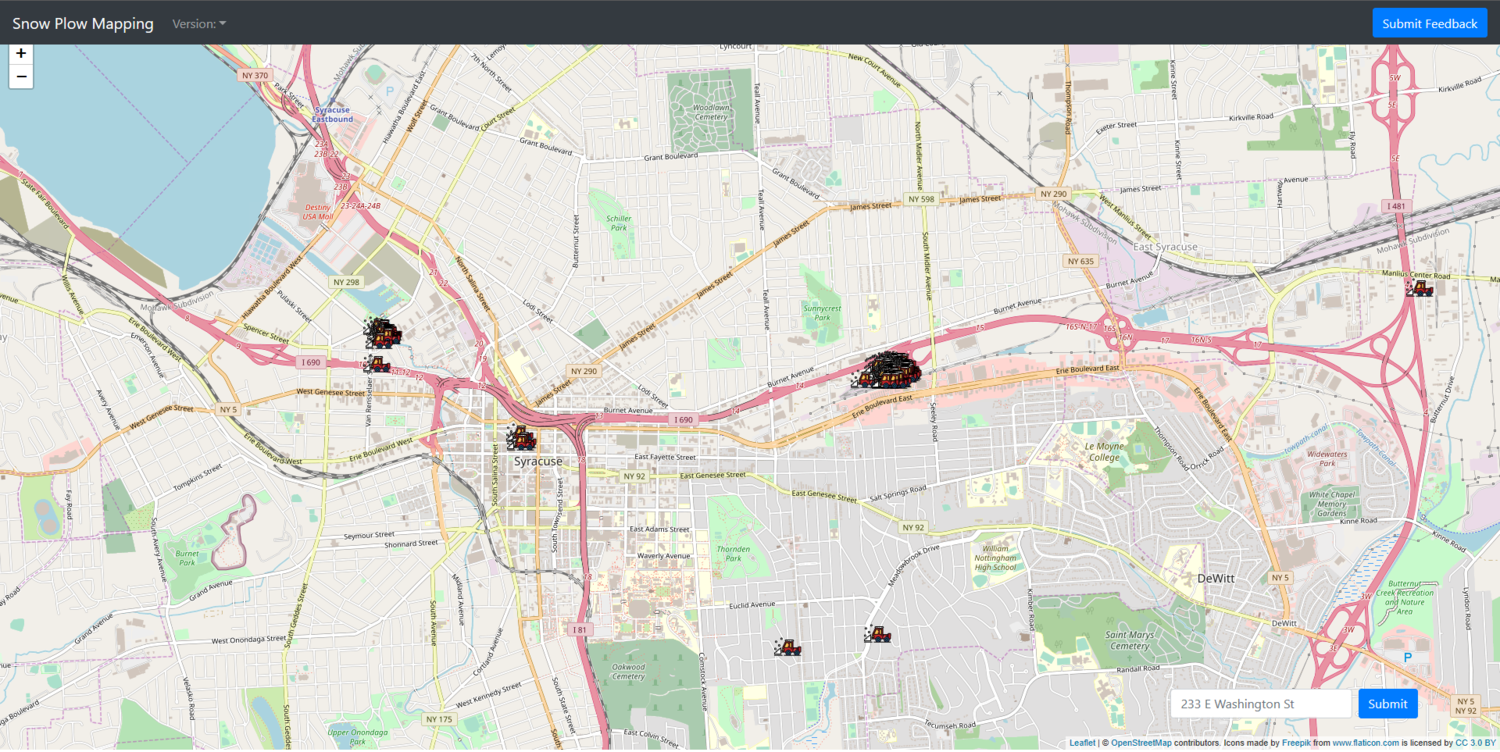

The front-end JavaScript used callbacks (functions that are passed as arguments to other functions) to load our vehicle data from a JSON file stored in the S3 bucket. The vehicle location data was stored inside a map structure that associates each vehicleID with a Leaflet marker object for that ID. Ideally the program would have done the same for street data, but that did not happen. The application also had a text box where users could enter their Syracuse street address; the map would then mark that address and zoom in, similar to Google Maps. This used the OpenStreetMap API to convert an address to geographic coordinates.

Above: the proposed client.

Deployment:

The application was developed in Python, which does not natively compile into a distributable binary (for example, a Windows EXE), unlike languages such as Java (JAR) or C. To work around this, I created an image of the machine I was working on and used that for deployment. The application’s multiple external dependencies also made it hard to distribute, because they all needed extensive setup to enter the relevant API keys and passwords.

The Current Solution:

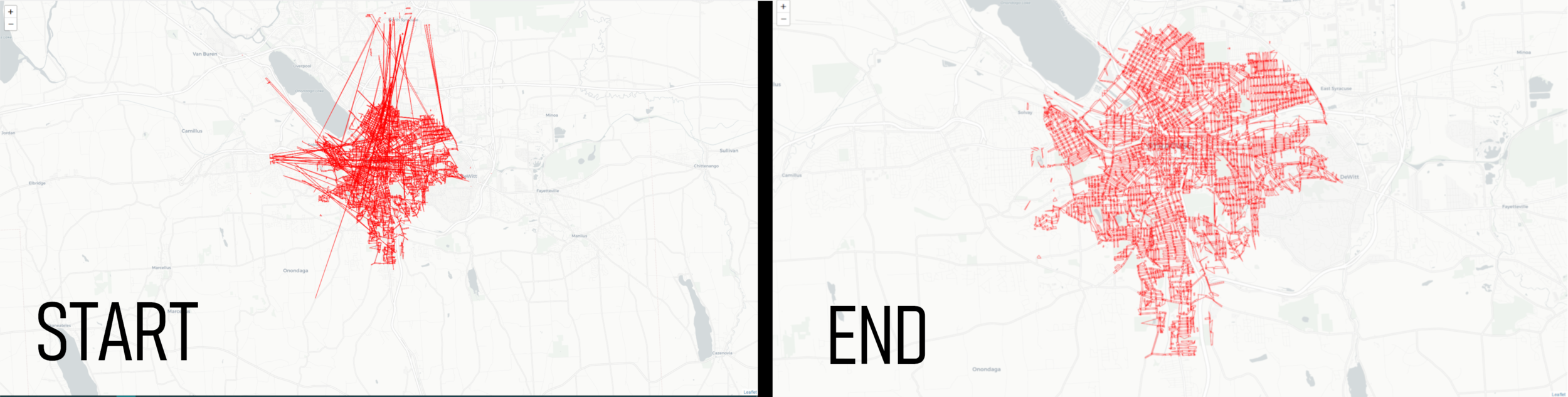

After I presented my work to Sam Edelstein, the City’s Chief Data Officer, he was able to build a solution in R (a statistical programming language). His solution uses a 60-minute update cycle and historical data. Each update pulls a 60-minute chunk of data from our vehicle tracking API. That point data is then fed into an API that turns the points into direction data, similar to Google Maps. From there, the direction data is cross-referenced with the City Streets 2011 shapefile to create a record, for that 60-minute time frame, of when streets were last plowed.
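The last step of that cycle, keeping track of when each street was last plowed, can be illustrated with a short Python sketch. This is not the R code behind the current map: the function name and the sample values are hypothetical, and it assumes the point-to-street matching has already produced (street ID, epoch time) pairs.

```python
from typing import Dict, Iterable, Tuple


def update_last_plowed(last_plowed: Dict[int, int],
                       matches: Iterable[Tuple[int, int]]) -> Dict[int, int]:
    """Keep the newest epoch timestamp seen for each street ID."""
    for street_id, seen_at in matches:
        if street_id not in last_plowed or seen_at > last_plowed[street_id]:
            last_plowed[street_id] = seen_at
    return last_plowed


# Two hypothetical 60-minute chunks of (street ID, epoch seconds) matches.
last_plowed: Dict[int, int] = {}
update_last_plowed(last_plowed, [(324, 1000), (325, 1200), (324, 1500)])
update_last_plowed(last_plowed, [(325, 4000), (324, 1400)])
```

Older observations never overwrite newer ones, so chunks can be processed in any order without corrupting the record.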

The snow plow map can be found at: https://cityofsyracuse.github.io/snowmap/snowmap/snowplow_map.html